Many system administrators seem to have problems with the concepts of pipes and redirection in a shell. A coworker recently asked me how to deal with log files and how to find the information he was looking for. This article tries to shed some light on it.

Input / Output of shell commands

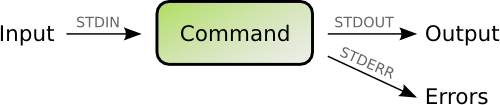

Many of the basic Linux/UNIX shell commands work in a similar way. Every command that you start from the shell is assigned three channels:

- STDIN (channel 0): Where your command reads its input from. Unless you specify otherwise, this is your keyboard.
- STDOUT (channel 1): Where your command sends its output. Unless you specify otherwise, the output is displayed in your shell.
- STDERR (channel 2): Where your command sends error messages if anything goes wrong. By default this output is also displayed in your shell.
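To make the three channels concrete, here is a minimal sketch of a shell function that uses all of them. The name `greet` is made up for this example, and the last lines already use the pipe and `2>` syntax explained further down.

```shell
#!/bin/sh
# 'greet' is a hypothetical example function, not a standard command.
greet() {
    read -r name               # read one line from STDIN (channel 0)
    echo "Hello, $name"        # write the result to STDOUT (channel 1)
    echo "greet: done" >&2     # write a diagnostic to STDERR (channel 2)
}

# Feed "world" into STDIN, capture STDOUT, and throw STDERR away.
out=$(printf 'world\n' | greet 2>/dev/null)
echo "$out"   # prints: Hello, world
```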

Try it yourself. The most basic command, which just passes everything from STDIN through to STDOUT, is ‘cat’. Open a shell, type ‘cat’ and press Enter. Nothing seems to happen, but ‘cat’ is actually waiting for input. Type something like “hello world”. Every time you press ‘Enter’ after a line, ‘cat’ outputs that line, so you get an echo of everything you type. To tell ‘cat’ that you are done, press Ctrl-D on an empty line; this signals end-of-file (EOF).
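If you want to repeat the experiment without typing, a here-document can stand in for the keyboard. The shell feeds the lines between `<<'EOF'` and the closing `EOF` to ‘cat’ on STDIN, and the end of the here-document plays the role of pressing Ctrl-D. A small sketch:

```shell
#!/bin/sh
# The here-document replaces keyboard input; its end is the EOF for 'cat'.
echoed=$(cat <<'EOF'
hello world
second line
EOF
)
printf '%s\n' "$echoed"
```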

The pipe(line)

A more interesting use of STDIN and STDOUT is to chain commands together: the output of the first command becomes the input of the second. Imagine the following chain:

The contents of the file /var/log/syslog are sent (as input) to the grep command. grep filters the stream for lines containing the word ‘postfix’ and outputs those. The next grep picks up what was filtered and filters it further for the word ‘removed’, so now we only have lines containing both ‘postfix’ and ‘removed’. Finally these lines are sent to ‘wc -l’, a command that counts the lines of its input. In my case it found 27 such lines and printed that number to my shell. In shell syntax this reads:

cat /var/log/syslog | grep 'postfix' | grep 'removed' | wc -l

The ‘|’ character is called a pipe. A sequence of commands joined together with pipes is called a pipeline.
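/var/log/syslog may not exist, or may not be readable by your user, on every system. So here is a sketch that runs the same pipeline against a small throwaway file instead. The log lines are invented for illustration.

```shell
#!/bin/sh
# Create a throwaway log file with made-up syslog-style lines.
log=$(mktemp)
cat >"$log" <<'EOF'
Jan 10 10:00:01 mail postfix/qmgr[123]: 4A1B2C3D: removed
Jan 10 10:00:02 mail postfix/smtpd[456]: connect from unknown
Jan 10 10:00:03 mail CRON[789]: job removed
Jan 10 10:00:04 mail postfix/qmgr[123]: 5E6F7A8B: removed
EOF

# Same pipeline as above: keep 'postfix' lines, then 'removed' lines, then count.
count=$(cat "$log" | grep 'postfix' | grep 'removed' | wc -l)
echo "$count"   # 2 of the 4 lines contain both words
rm -f "$log"
```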

Useless use of ‘cat’

Actually ‘cat’ is meant for concatenating files, as in “cat file1 file2”. But some administrators abuse it just to feed a file into a pipeline. That’s bad style, and the reason why Randal L. Schwartz (a seasoned programmer) used to hand out virtual “Useless use of cat” awards. Most commands accept a filename as their last argument and read their input from it. So this is the better way:

grep something /var/log/syslog | wc -l

This works too, but is considered bad style:

cat /var/log/syslog | grep something | wc -l

And since grep has a “-c” option that counts matching lines, the whole task can be done with grep alone:

grep -c something /var/log/syslog
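All three variants give the same answer. A quick sketch to check that on a throwaway file (the file contents are made up):

```shell
#!/bin/sh
log=$(mktemp)
printf '%s\n' 'something happened' 'nothing here' 'something else' >"$log"

a=$(cat "$log" | grep something | wc -l)   # useless use of cat
b=$(grep something "$log" | wc -l)         # grep opens the file itself
c=$(grep -c something "$log")              # grep counts the matching lines itself
echo "$a $b $c"
rm -f "$log"
```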

Using files as input and output

Output (STDOUT)

Instead of using the keyboard for input and the screen for output, you can also use files. While

date

shows you the current date on the console you can use

date >currentdatefile

to redirect the output of the command (STDOUT) to the file named ‘currentdatefile’.
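Note that ‘>’ replaces whatever the file contained before. To add to the end of an existing file, use ‘>>’ instead. A small sketch in a temporary directory:

```shell
#!/bin/sh
dir=$(mktemp -d)
date >"$dir/currentdatefile"    # '>' creates the file (or empties an existing one)
date >"$dir/currentdatefile"    # ...so running it again still leaves one line
after_overwrite=$(wc -l <"$dir/currentdatefile")
date >>"$dir/currentdatefile"   # '>>' appends a second line instead
after_append=$(wc -l <"$dir/currentdatefile")
echo "$after_overwrite $after_append"
rm -r "$dir"
```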

Input (STDIN)

This also works for input. The command

grep something

will search for the word ‘something’ in what you type on your keyboard. But if you want to look for ‘something’ in a file called ‘somefile’ you could run

grep something <somefile
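Both forms find the same lines. The difference is who opens the file: with ‘<’ the shell opens it and hands it to grep as STDIN, so grep never learns its name. A sketch with a made-up file:

```shell
#!/bin/sh
somefile=$(mktemp)
printf '%s\n' 'first line' 'something here' 'last line' >"$somefile"

via_stdin=$(grep something <"$somefile")    # shell opens the file, grep reads STDIN
via_argument=$(grep something "$somefile")  # grep opens the file named as argument
echo "$via_stdin"
rm -f "$somefile"
```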

Input and output

You can also redirect both input and output in the same command. A politically incorrect way to copy a file would be

cat <oldfile >newfile

Of course you would use ‘cp’ for that purpose in real life.
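If you want to convince yourself that the copy is exact, ‘cmp’ can compare the two files. A sketch using a temporary directory and made-up contents:

```shell
#!/bin/sh
dir=$(mktemp -d)
printf 'line one\nline two\n' >"$dir/oldfile"

# STDIN comes from oldfile, STDOUT goes to newfile; cat just passes data through.
cat <"$dir/oldfile" >"$dir/newfile"

# cmp -s prints nothing and exits 0 when both files are byte-for-byte identical.
if cmp -s "$dir/oldfile" "$dir/newfile"; then same=yes; else same=no; fi
echo "identical: $same"
rm -r "$dir"
```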

Errors (STDERR)

So far this covers STDIN (<) and STDOUT (>), but you can also redirect the STDERR channel by using (2>). An example would be

grep something <somefile >resultfile 2>errorfile
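grep only writes to STDERR when something goes wrong, so the sketch below uses ‘ls’ on one existing and one missing file to get output on both channels. The file names are arbitrary.

```shell
#!/bin/sh
dir=$(mktemp -d)
touch "$dir/exists"

# ls prints the existing file on STDOUT and complains about the missing one on STDERR.
ls "$dir/exists" "$dir/missing" >"$dir/resultfile" 2>"$dir/errorfile"

result=$(cat "$dir/resultfile")
error=$(cat "$dir/errorfile")
echo "STDOUT: $result"
echo "STDERR: $error"
rm -r "$dir"
```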

2>&1 magic

Many admins stumble when it comes to redirecting one channel to another. Say you want to redirect both STDOUT and STDERR to the same file. Then you cannot do

grep something >resultfile 2>resultfile

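This opens ‘resultfile’ twice, independently. Each channel gets its own write position, both starting at the beginning of the file, so the two streams overwrite each other and you end up with garbled or missing output. Instead you need to do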
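You can watch this go wrong with a command group that writes one line to each channel (the text is arbitrary). Because each channel has its own write position in the file, the STDERR line lands on top of the start of the STDOUT line:

```shell
#!/bin/sh
f=$(mktemp)

# Both channels open the same file separately, each starting at offset 0.
{ echo "first stdout line"; echo "err" >&2; } >"$f" 2>"$f"

# "err\n" (4 bytes) has overwritten the first 4 bytes of "first stdout line\n".
first=$(sed -n 1p "$f")
second=$(sed -n 2p "$f")
echo "$first / $second"   # prints: err / t stdout line
rm -f "$f"
```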

grep something >resultfile 2>&1

This redirects STDOUT (1) to the ‘resultfile’ and tells STDERR (2) to send the output to what STDOUT is set to (also ‘resultfile’).
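A sketch of the correct form, again using ‘ls’ on one existing and one missing file (arbitrary names) so that both channels produce a line:

```shell
#!/bin/sh
dir=$(mktemp -d)
touch "$dir/exists"

# STDOUT goes to resultfile first, then STDERR is pointed at the same open file.
ls "$dir/exists" "$dir/missing" >"$dir/resultfile" 2>&1

lines=$(wc -l <"$dir/resultfile")
echo "lines in resultfile: $lines"   # one line from each channel
rm -r "$dir"
```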

What does not work is this order:

grep something 2>&1 >resultfile

It may look right to us humans, but it does not redirect STDERR to ‘resultfile’. The explanation: the shell processes redirections from left to right. So first “2>&1” is evaluated, which means “send STDERR to wherever STDOUT currently points”. As STDOUT usually points to your shell at that moment, STDERR is also sent to the shell. Next the shell finds “>resultfile”, which sends STDOUT to ‘resultfile’ but does not change where STDERR was already pointed. So STDERR output still ends up in the shell.
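The sketch below makes the leak visible. Inside ‘$(...)’ STDOUT is a pipe back to the script, so whatever escapes the file ends up in the variable ‘leaked’. As before, ‘ls’ on an existing and a missing file (arbitrary names) provides output on both channels.

```shell
#!/bin/sh
dir=$(mktemp -d)
touch "$dir/exists"

# '2>&1' copies the current STDOUT (the $(...) pipe) to STDERR *before*
# '>resultfile' moves STDOUT, so the error message escapes the file.
leaked=$(ls "$dir/exists" "$dir/missing" 2>&1 >"$dir/resultfile")

in_file=$(wc -l <"$dir/resultfile")
echo "lines in resultfile: $in_file"   # only the STDOUT line
echo "leaked past the file: $leaked"   # the STDERR message
rm -r "$dir"
```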

Interesting commands

- grep: Keeps only the lines that contain a search word. “grep -v” outputs all lines that do not contain the search word.
- sort: Sorts the input alphabetically (it has to wait for EOF before it can output anything). “sort -n” sorts numerically. “sort -u” outputs each distinct line only once.
- wc: Word count. Counts bytes, words and lines. “wc -l” outputs only the number of lines.
- awk: A sophisticated language (similar to Perl) that can do something with every line. “awk '{print $3}'” outputs the third column of every line.
- sed (stream editor): A search/replace tool to change something in every line.
- less: Useful at the end of a pipe. Lets you browse through the output one page at a time. (The name plays on a similar but less capable tool called “more”, which showed you the first page and let you press ‘Space’ to view more.)
- head: Shows only the first ten lines. “head -50” shows the first 50 lines.
- tail: Shows only the last ten lines. “tail -50” shows the last 50 lines. “tail -f” keeps following a file and prints new lines as they are appended.
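Several of these commands are typically combined in one pipeline. The sketch below asks a made-up log which programs wrote to it. The sample lines, and the assumption that the program name sits in the fifth column, are specific to this syslog-style example, so adjust them to your own logs.

```shell
#!/bin/sh
log=$(mktemp)
cat >"$log" <<'EOF'
Jan 10 10:00:01 mail postfix/qmgr[123]: 4A1B2C3D: removed
Jan 10 10:00:02 mail postfix/smtpd[456]: connect from unknown
Jan 10 10:00:03 mail CRON[789]: job started
Jan 10 10:00:04 mail postfix/qmgr[123]: 5E6F7A8B: removed
Jan 10 10:00:05 mail sshd[321]: Accepted publickey
EOF

# awk:       take the 5th column (program name plus PID, e.g. "sshd[321]:")
# sed:       strip everything from the "[" onward, leaving the program name
# grep -v:   drop the CRON lines
# sort -u:   sort and keep each program name once
# head -n 2: show only the first two results
programs=$(awk '{print $5}' "$log" | sed 's/\[.*//' | grep -v CRON | sort -u | head -n 2)
printf '%s\n' "$programs"
rm -f "$log"
```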

Very good explanation indeed

I stumbled upon this site after getting more confused about redirections. Great explanation.

Thanks for your nice article.

You describe redirection of STDOUT and STDERR to files and also piping of STDOUT. I would be interested in the following:

Let us say we have two commands, C1 and C2. I want to send STDOUT of C1 to STDOUT of the whole thing, STDERR of C1 to STDIN of C2, and then STDOUT and STDERR of C2 to STDERR again. This would be useful for things like filtering STDERR with grep.

Do you know how to do this?

The order is important here!

Thanks for that, Anon.

The solution I was looking for is less complex, but it works as I needed:

C1 2>&1 | C2 > outfile.txt
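For the stricter setup asked about above (C1's STDOUT stays on STDOUT, only C1's STDERR goes through C2, and C2's output ends up on STDERR), one widely used approach is to park the original STDOUT on a spare descriptor 3 and swap descriptors around it. This is a sketch, not necessarily the command the earlier reply had in mind; ‘c1’ and ‘c2’ are hypothetical stand-in functions, and the ‘2>"$errlog"’ on the group is only there so the example can show where each part landed.

```shell
#!/bin/sh
# Hypothetical stand-ins for C1 and C2.
c1() { echo "normal output"; echo "warning: disk almost full" >&2; echo "error: boom" >&2; }
c2() { grep -v '^warning'; }   # filters C1's STDERR

errlog=$(mktemp)
# 3>&1 saves the current STDOUT as fd 3. In c1, 2>&1 sends STDERR into the pipe,
# then 1>&3 points STDOUT back at the saved STDOUT. c2's STDOUT goes to STDERR.
# In real use you would just write: { C1 2>&1 1>&3 3>&- | C2 1>&2 3>&-; } 3>&1
stdout_part=$( { c1 2>&1 1>&3 3>&- | c2 1>&2 3>&-; } 3>&1 2>"$errlog" )
stderr_part=$(cat "$errlog")
echo "STDOUT: $stdout_part"   # normal output
echo "STDERR: $stderr_part"   # error: boom (the warning was filtered out)
rm -f "$errlog"
```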

It is a very good tutorial!!

Thanks!!

I agree. It should be much higher in the Google search results. For some reason mediocre introductions to pipes and redirection are more popular. I have to dig down to find treasures like this post. Thanks, Mr. Haas!

Good tutorial, thanx. One small remark: using "wc -l" in combination with "grep" is bad practice too.

Instead of typing:

grep something /var/log/syslog | wc -l

It would be better (i.e. faster on big files) to use:

grep -c something /var/log/syslog

Many thanks, Christoph!

This is a really clear article on the subject, in content and layout, and helped me to solve a problem that I was about to abandon as a waste of time.

Say you want to redirect both STDOUT and STDERR to the same file.

This can be achieved more easily like this:

grep something &> resultfile

Thus resultfile contains both STDOUT and STDERR; there is no need to complicate it further.

This is a Bash-only way. It is not POSIX. It won’t work with the Korn shell, for example.

Hi, very good tutorial, but can you help me with this?

I want to pipe my mails to a script. How can that be done?

Thanks

Where C1=yourscriptname.sh

$ mail | C1

That may appear quite simple, but the question is unclear. Without knowing your computer’s email system and programs, one cannot really help much further. Note, though, that you may find a solution in sendmail or postfix.

I would guess you can make progress by starting with:

$ man sendmail

or

$ man postfix

You may even try

$ man mail

to acquire better familiarity with your email system. I believe Debian includes Exim 4 as its email system. I only mention Debian because it is what I currently use (and abuse) and prefer. I’m sure other distributions arrange their own variety of email systems in a similar fashion, although they may offer slight variations. Unix/Linux is all Unix/Linux as far as the basic nuts and bolts are concerned, though, and liberal use of the man command is often helpful. Of course, some of us have yet to heed our own preaching. *chuckle* 😉

Ding dong… first of all congratulations for this amazing content and domain name! My co-workers were afraid when they got me surfing around the “workaround.org” site… =]

One of the guys here asked me to get the cd command to work with a pipe, something like this:

echo "/tmp" | cd

and I just forwarded this question to you… why doesn’t the command above work?

Thank you. The reason that your command does not work is that the "cd" command does not expect any input (from STDIN). "cd" expects the directory that you want to go to as the first argument. What you could do:

cd `echo /tmp`

The backticks evaluate the echo command so that you get "cd /tmp".
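The same idea in the more modern ‘$(...)’ form, which nests more easily than backticks. This is a sketch; /tmp is just an example directory. Note that even a ‘cd’ that did read STDIN would not help at the end of a pipeline in Bash, because Bash runs every part of a pipeline in a subshell by default, and a directory change there does not reach your interactive shell.

```shell
#!/bin/sh
# $(...) runs the inner command and pastes its output in as cd's argument.
cd "$(echo /tmp)" || exit 1
pwd
```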

Thank you

Thank you

Thank you.

AIX problem with cat file | program. There appears to be a scheduling conflict where the program is run before cat can fill the pipe. This results in very strange behavior in my program. With the same file and the same program, it randomly works or fails depending on how each process is scheduled.

The solution was to use program < file instead, and that removed the intermittent failures. I can reproduce this all the way up to AIX 7.1, whereas SUSE and Red Hat did not have these failures when tested with the same data and the same program.

Nice 🙂

nice intro

nice

grep -il "diskview -j" *-Report* | more -R ; grep -A50 -ih "diskview -j" *-Report* | more -R

I want it to run both greps on the first *-Report* file and then move on to the next report file, so that I get the file that the diskview came from and then the next 50 lines after "diskview -j".

I am also trying to figure out how to get the output of both greps into a single | less.

Is this possible ?

Thanks for the nice explanation. The part about

grep something >resultfile 2>&1

is something that confused me a lot. If I want to pipe the result into, say, another script instead of ‘resultfile’, how would that look at the end?

Please expand on the use of >>. I am sure I cannot just add an extra > to all your examples to successfully append to the receiving file.

Thanks

Thank you! Your work is still being put to good use!